
A VP of Marketing believed their team could achieve greater ROI by adding a human component to their mobile shopping experience. They hypothesized that a conversational experience would drive more purchases when compared to one-way messages.

The hypothesis proved correct as they achieved a 24% CVR lift when compared to one-way messages. This also translated into an 18x ROI for the brand.

Those are numbers marketing teams dream of reporting. But these wins were only possible because of A/B testing.

It was a similar story for JAXXON, which ran an A/B test on one of their holiday campaigns. The results speak for themselves: they saw a 249% increase in overall ROI and a 138% higher CTR. One of the interesting insights from this test was that adding three more sends to their campaign achieved these results.

A/B testing is not just for immediate optimization. It’s also for informing future marketing decisions based on precise audience preferences and behaviors.

- Ricky Hagen, Retention Marketing Manager, JAXXON

A/B testing has helped Attentive customers achieve:

Like JAXXON, another brand also earned 3.3x more revenue with three additional message sends during a holiday campaign—and saw a 21% increase in CTR even with the increase in messages. It seems counterintuitive, but that’s what A/B testing is for. Without running these tests, these brands would never have the data to back up changes to their SMS and email marketing strategies.

By comparing the performance of two variations of a campaign, you can gain valuable insights into which strategies, designs, or messages are most effective. This process helps eliminate guesswork, optimize resources, and ultimately drive better engagement.
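To make the comparison concrete, here's a minimal Python sketch of how you might calculate click-through rate, conversion rate, and relative lift for two variations. The counts are hypothetical, and defining CVR as purchases per message sent is an assumption; your platform may define it differently (for example, purchases per click).

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    sends: int        # messages delivered
    clicks: int       # recipients who clicked the link
    conversions: int  # recipients who purchased

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks per message sent."""
        return self.clicks / self.sends

    @property
    def cvr(self) -> float:
        """Conversion rate, defined here (an assumption) as purchases per message sent."""
        return self.conversions / self.sends


def relative_lift(control: float, variant: float) -> float:
    """Relative change of the variant over the control (0.24 means +24%)."""
    return (variant - control) / control


# Hypothetical counts, for illustration only.
a = VariantStats(sends=10_000, clicks=800, conversions=200)
b = VariantStats(sends=10_000, clicks=920, conversions=248)

ctr_lift = relative_lift(a.ctr, b.ctr)
cvr_lift = relative_lift(a.cvr, b.cvr)
print(f"CTR lift: {ctr_lift:.1%}, CVR lift: {cvr_lift:.1%}")
```

A lift figure alone doesn't tell you whether the difference is reliable, so it's worth pairing it with a significance check before acting on it.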

Before you start running A/B tests, let’s review a few best practices from our team—having worked with more than 8,000 brands:

Not only should you test different hypotheses to remove guesswork and gain more insights, but define your hypothesis and metrics clearly upfront. Don't be afraid to experiment with different types of tests, like creative changes or different types of incentives. Experiment regularly. Even if you've tried a test historically, it's always a good idea to re-test it as consumer buying behavior is constantly evolving. For example, we use the Bayesian statistics model to measure the probability that observed differences are significant, ensuring reliable and unbiased results. This provides a clear view of customer cohorts.

- Anca Filip, Head of Product, Mention Me
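If you're curious what a Bayesian check can look like in practice, here's a minimal sketch using only Python's standard library. It is not Mention Me's or Attentive's implementation. It assumes a simple Beta-Binomial model with uniform Beta(1, 1) priors and estimates the probability that variant B's true conversion rate is higher than variant A's. The function name and the counts are hypothetical.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) with Beta(1, 1) priors via Monte Carlo."""
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    wins = 0
    for _ in range(draws):
        # The posterior for a conversion rate is Beta(1 + successes, 1 + failures).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical results: 200 purchases from 10,000 sends vs. 248 from 10,000.
p = prob_b_beats_a(conv_a=200, n_a=10_000, conv_b=248, n_b=10_000)
print(f"Probability that B beats A: {p:.1%}")
```

A common approach is to agree on a threshold upfront (say, 95%) and only declare a winner once the probability clears it.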

Keep track of your A/B tests and their results with our downloadable A/B Testing Template—perfect for tracking your tests across both SMS and email marketing.

Now that we’ve talked about what goes into A/B testing in SMS and email, let’s look at some examples.

Here are some A/B testing ideas and examples to help you get started:

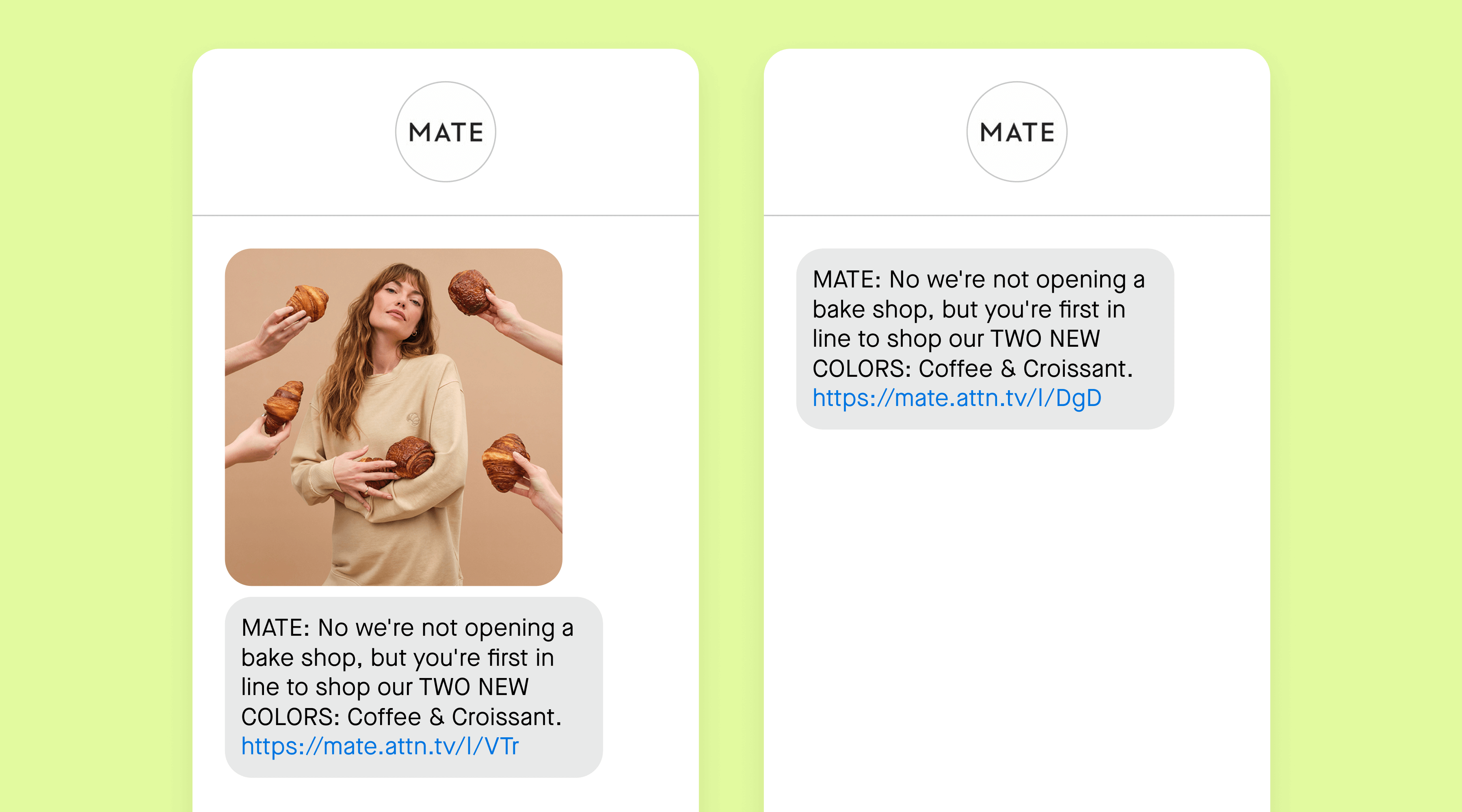

Incorporating multimedia—like images, GIFs, video, or audio—is a fun way to add color to your text messages. But maybe your customers are more likely to engage with text-only messages (i.e., SMS vs. MMS). This is one of the first and easiest A/B tests you can run to see which type of message works better for your audience.

Remember to keep the copy the same for each variation, so you’re only comparing the effectiveness of including an image vs. not including one.

If you’ve determined that your subscribers are more likely to engage with messages that include visuals, the next step is to test different types of images to see which ones convert best. For example, you could include a product close-up in one variant, an image of a model in another variant, and a lifestyle image in a third variant—using the same copy in each one.
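If you're splitting your audience yourself rather than relying on your platform's built-in tooling, one way to do it is to hash each subscriber ID into a bucket. The sketch below is an illustration under that assumption; the function name, test name, and subscriber IDs are hypothetical. Hashing keeps the split roughly even and ensures the same subscriber always lands in the same variant.

```python
import hashlib
from collections import Counter


def assign_variant(subscriber_id: str, test_name: str, variants: list[str]) -> str:
    """Map a subscriber to one variant, stably, by hashing their ID."""
    key = f"{test_name}:{subscriber_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer, then pick a bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


variants = ["product_closeup", "model_photo", "lifestyle_photo"]
subscribers = [f"sub-{i}" for i in range(9_000)]  # hypothetical IDs
counts = Counter(assign_variant(s, "image-style-test", variants) for s in subscribers)
print(counts)
```

Including the test name in the hash means a subscriber who saw variant A in one test isn't automatically placed in variant A of the next one.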

You can also run A/B tests to see whether static images, GIFs, audio, or video have more of an impact on click-through and conversion rates, or whether adding text over an image (e.g., to reinforce a limited-time sale or offer) performs better than the same image without the text added.

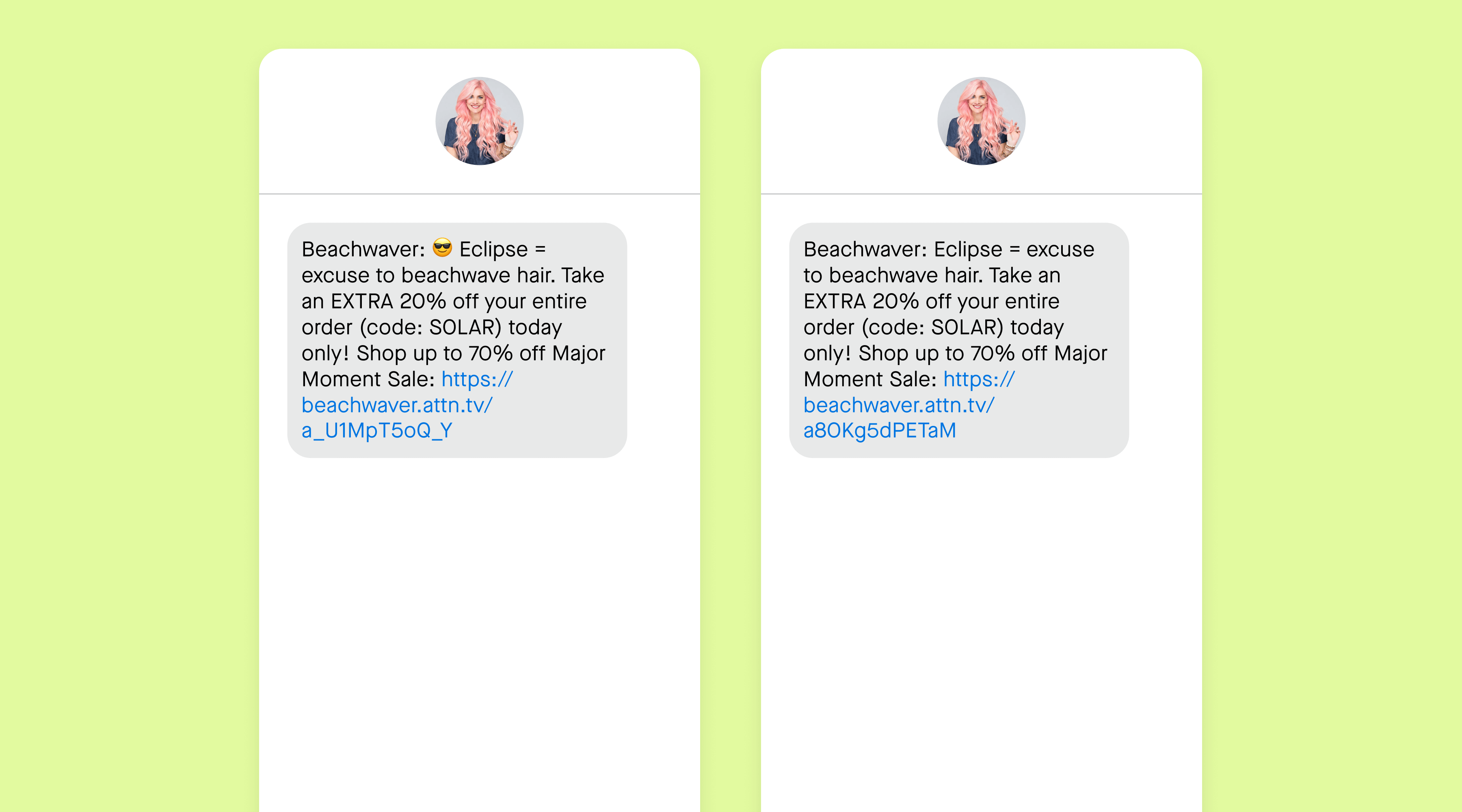

Emojis can be another great way to add personality to your text messages. While we typically recommend using emojis sparingly—and only when they add value to the message—every audience is different, so it’s important to test what works best for yours.

To see if emojis are effective in your SMS campaigns, start by running an A/B test comparing messages with and without emojis. If you find that customers respond positively to messages with emojis, then you can run additional tests to figure out the ideal number of emojis to use per message and which emojis get more engagement.

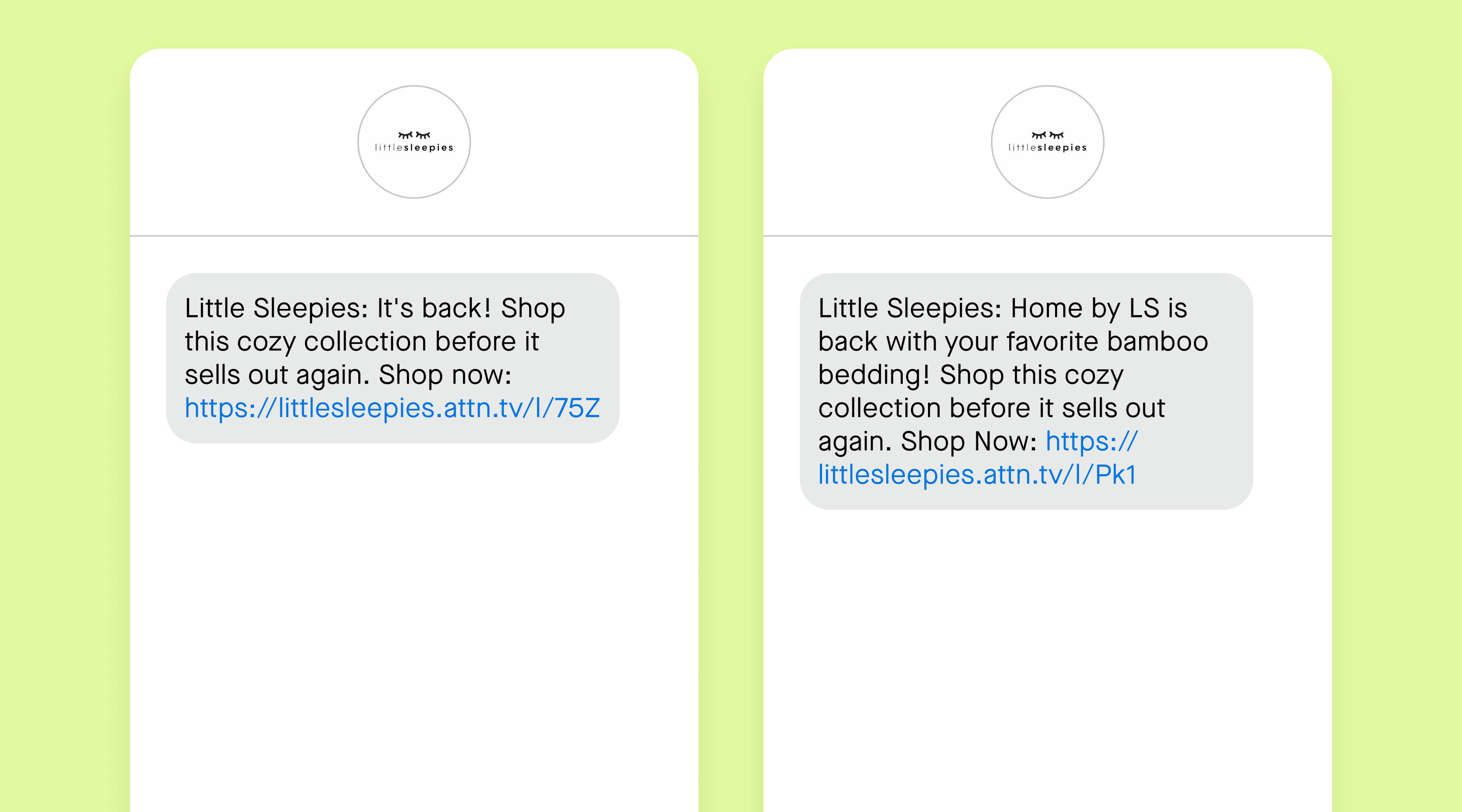

Keeping your text message copy between 75 and 115 characters (or 3 to 4 lines long) is a good rule of thumb, but you can run tests to see if your subscribers prefer shorter or longer messages. Try to keep the copy generally the same for both versions, using the shorter message copy as the foundation for your longer message, so you can accurately compare them.

Experiment with how you structure your messages, too. Do your subscribers prefer when you use no line breaks or many? Does it make a difference when you capitalize certain words (e.g., FREE, SALE) or keep the whole message in sentence case? Test these elements one at a time to fine-tune the format and length of your text messages.

Every text message you send should include a tracking link to your website, but where you place the link can affect how many people click it. Run tests to see if placing links near the top of your message, in the middle of your copy, or at the bottom of your message drives more conversions.

You should also experiment with directing subscribers to different pages on your website. For example, when you launch a new collection, does sending people to the full collection page or to the specific product page influence more purchases? You can do a similar test when promoting new arrivals. Try creating one variation where you direct people to your homepage and one where you link directly to your new arrivals page.

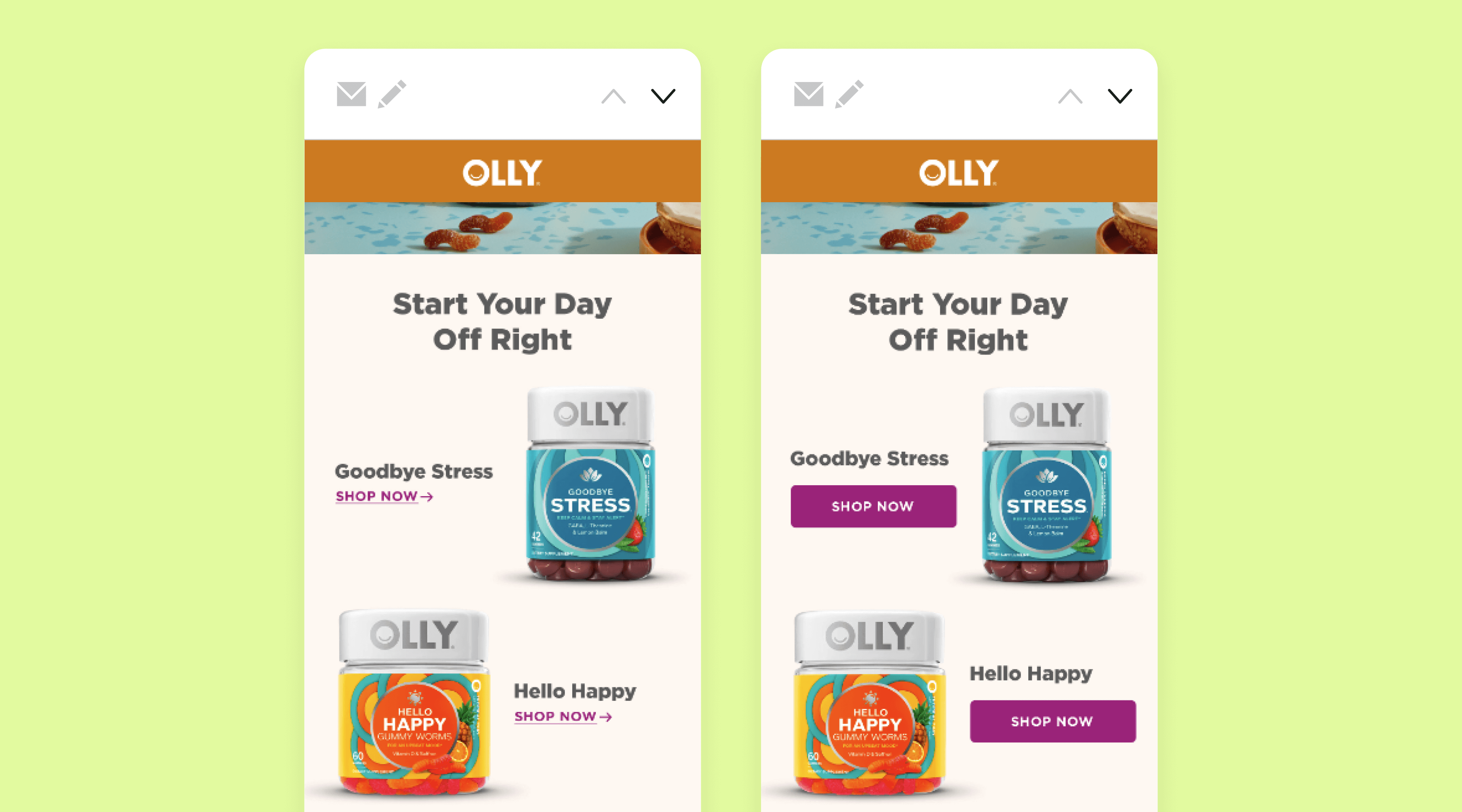

Ending your text messages with a clear call to action can help encourage subscribers to click through and shop immediately. Within your CTA, you could keep the wording the same and test how an all-caps version performs.

You can test different short-and-sweet options (e.g., “shop now,” “click here,” or “ends soon”) and capitalizations (e.g., “Shop now” vs. “SHOP NOW”). Or, see if something more playful or descriptive works better (e.g., “What are you waiting for?” vs. “Shop now”).

We’ve found that consumers typically prefer dollar-off or percentage-off discounts, but do your customers prefer one over the other? Do they want other incentives like free shipping, loyalty benefits, and first access to new product drops? Run A/B tests across your campaigns and triggered messages to find out what drives the biggest impact for your brand.

You should also A/B test your email and SMS sign-up units to see if you convert more website visitors into subscribers with discount-based incentives or other kinds of offers.

In some cases, you might want to experiment with different copywriting approaches or positioning. For example, highlighting the benefits of specific items vs. using a fun play on words to garner interest, or leaning into FOMO to promote limited-stock items vs. positioning them as “back-in-stock” while supplies last.

Just remember to keep as many elements as possible the same in both versions—like the link and the image—since the copy is the variable you’re testing.

For some brands, sending text-only messages that get straight to the point may work better (e.g., “We’re having a sale. Shop now!”). For others, it might be more effective to use a casual tone of voice that reflects the personal nature of text messaging (e.g., “Hi friend! Our new collection has your name written all over it. Treat yourself to something new.”).

One Attentive customer that tested their brand voice in SMS saw a 15x ROI. Their CEO and Co-Founder said, “Test every aspect of your tone to fine tune it and understand what resonates with your subscribers.”

Play around with different tones or styles until you find something that resonates with your subscribers and aligns with your brand voice. Or, test Brand Voice AI that generates brand-specific copy with models trained on your past, top-performing messages.

The key to scaling your SMS program is through A/B testing! It’s the only way to truly measure what your audience responds to. A test that marketers often overlook is something I like to call the “Address Test.” If you’re struggling with low click rates, you can experiment with how you address your recipients. Have you tried calling them by their first name? Or maybe your brand has such a loyal customer base you have a nickname for them, like how Lush Cosmetics calls their community “Lushies.” Test different ways you address your customers to see if your click rate can increase. This deeper level of personalization could be exactly what you need to drive those clicks!

- Keyshawna Johnson, Head of Retention Marketing, Avenue Z

This is also an area where you can play around with dynamic variables, such as the {firstName} macro, to see if your subscribers respond well to being addressed directly.
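As an illustration of what a dynamic variable does, here's a small Python sketch that fills in a `{firstName}` placeholder and falls back to a generic greeting when the name is missing. This is not how Attentive processes macros internally; the `render_message` function and the "there" fallback are assumptions made for the example.

```python
from typing import Optional


def render_message(template: str, first_name: Optional[str], fallback: str = "there") -> str:
    """Fill the {firstName} placeholder, using a fallback when the name is missing."""
    name = first_name.strip() if first_name and first_name.strip() else fallback
    return template.replace("{firstName}", name)


template = "Hi {firstName}! Our new collection just dropped."
personalized = render_message(template, "Sam")  # subscriber with a known name
generic = render_message(template, None)        # subscriber with no name on file
print(personalized)
print(generic)
```

When you test personalization, check how many subscribers would receive the fallback, since a large share of generic greetings can dilute the result.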

When’s the best time to send an SMS campaign? Your audience will have specific preferences, but a good place to start is by sending the same message at different times (i.e., in the morning, afternoon, and at night) and on different days of the week to see when your subscribers are most active. If you find that more people tend to open and engage with your messages at night, then you can get more granular and test the same message at hourly intervals (e.g., 5pm, 6pm, 7pm, 8pm). We’ve found if you want to optimize your revenue per send, weekdays are slightly better than weekends.

Keep in mind: Under the TCPA and related state laws, you can’t send text messages during “quiet hours.” Attentive's default and recommended Quiet Hours are 8pm to 12pm EST. If you use Attentive, we also recommend using our time zone-based message sending feature, which allows you to schedule and send messages based on a subscriber's local time zone.
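If you schedule test sends programmatically, a quiet-hours check could look something like the sketch below. It uses the 8pm to 12pm window stated above as its default and assumes you've already converted the send time to the time zone you're checking against. The `in_quiet_hours` function is hypothetical, not part of any Attentive API.

```python
from datetime import time


def in_quiet_hours(local_time: time, start: time = time(20, 0), end: time = time(12, 0)) -> bool:
    """Return True if local_time falls in a quiet window that may wrap past midnight."""
    if start <= end:
        # Window sits within a single day, e.g. 01:00 to 06:00.
        return start <= local_time < end
    # Window wraps past midnight, e.g. 20:00 to 12:00 the next day.
    return local_time >= start or local_time < end


for candidate in (time(9, 30), time(14, 0), time(21, 0)):
    status = "quiet hours" if in_quiet_hours(candidate) else "ok to send"
    print(candidate.strftime("%H:%M"), status)
```

The wrap-around branch matters because the window crosses midnight; a simple "between start and end" comparison would never match.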

You should also test the timing of your triggered messages to see how different wait times impact performance. For example, A/B test your abandoned cart reminders to send after one hour vs. three hours to see which one leads to more completed purchases. Or, experiment with sending post-purchase messages after 14 days vs. 30 days to figure out the best time to nudge recent shoppers to come back and buy again.

Pro tip: Optimize send time for each subscriber. Attentive’s Send Time AI operates at the individual subscriber level. It analyzes factors such as time zone, past interactions, and purchasing habits to identify the perfect time to send a message to each subscriber. When testing Send Time AI, Boston Proper saw a 13% increase in CTR, a 10% increase in CVR, and a 10% increase in revenue per message.

Many A/B tests done on SMS can also apply to email marketing, such as testing images, copy variations, emojis, personalization, and CTAs.

But when comparing A/B tests between email marketing campaigns and SMS campaigns, there are some key factors to consider. Firstly, the engagement dynamics differ significantly—emails allow for more expansive content and visuals, while SMS messages are concise and immediate. This affects how audiences interact with and respond to each type of message.

The timing and frequency of communication are crucial; emails can be read at the recipient's leisure, whereas SMS messages often demand immediate attention.

Pro tip: If you’re testing the timing of your send, we’ve found that conversion rates peak between 1pm and 5pm EST, and again around 8pm EST.

Also, the demographic and behavioral characteristics of the audience play a role—some segments may prefer or be more responsive to one channel over the other, influencing the effectiveness of A/B tests across different mediums.

Luckily for Attentive customers, you can A/B test both SMS and email messages in the same campaign. To make it even easier, we have an Autowinner feature that'll automatically pick the best message based on the winning criteria you select (click-through rate, conversion rate, total revenue, or unsubscribe rate).
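To show the logic behind choosing a winner by criterion, here's a rough Python sketch. It is not Attentive's Autowinner implementation; the data structure, criterion names, and numbers are all hypothetical. The main point is that the "best" variation depends on the criterion you pick, and that for unsubscribe rate, lower is better.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    sends: int
    clicks: int
    conversions: int
    revenue: float
    unsubscribes: int


# Metric functions keyed by criterion; the flag says whether higher is better.
CRITERIA = {
    "click_through_rate": (lambda v: v.clicks / v.sends, True),
    "conversion_rate": (lambda v: v.conversions / v.sends, True),
    "total_revenue": (lambda v: v.revenue, True),
    "unsubscribe_rate": (lambda v: v.unsubscribes / v.sends, False),
}


def pick_winner(results, criterion):
    """Return the variant that performs best on the chosen criterion."""
    metric, higher_is_better = CRITERIA[criterion]
    return max(results, key=metric) if higher_is_better else min(results, key=metric)


# Hypothetical results for two variations.
results = [
    VariantResult("A", sends=5_000, clicks=400, conversions=90, revenue=4_500.0, unsubscribes=25),
    VariantResult("B", sends=5_000, clicks=450, conversions=85, revenue=4_900.0, unsubscribes=40),
]
winners = {criterion: pick_winner(results, criterion).name for criterion in CRITERIA}
print(winners)
```

In this made-up example, B wins on click-through rate and total revenue while A wins on conversion rate and unsubscribe rate, which is why it helps to decide on your winning criterion before the test starts.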

Dive deeper into some best practices for using SMS and email together, including experimentation, by watching this quick video.

Now let’s cover some tests to run in your email programs.

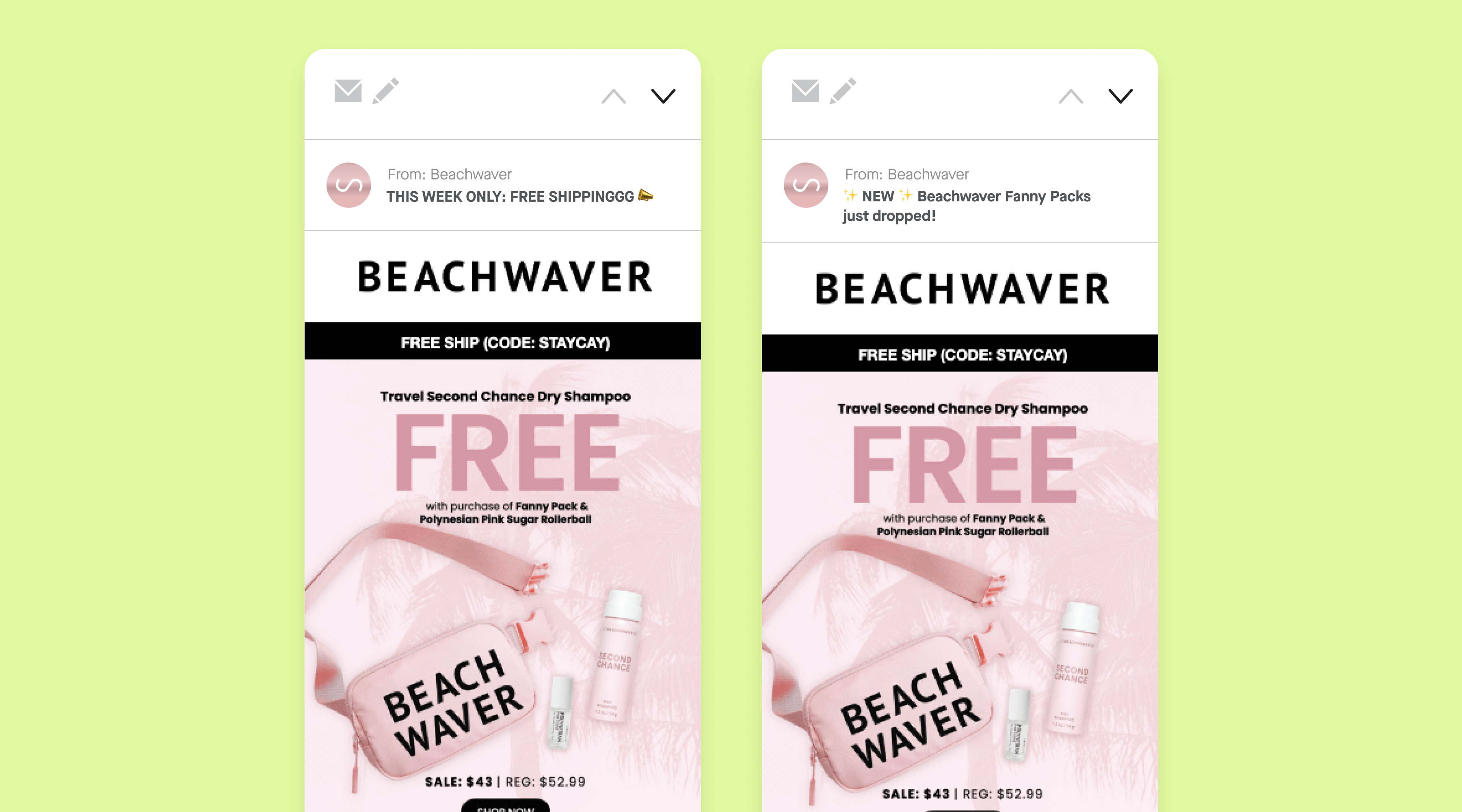

Your subject line is often the first thing subscribers see in their inbox—and it can make or break your open rates. By creating two variations of your subject line, you can gather data on which version performs better with your target audience. This could involve testing different lengths, using emojis, personalizing with the recipient's name, or experimenting with various tones and wording.

For example, you might compare a straightforward subject line like "Exclusive Offer: 20% Off Your Next Purchase" with a more playful one like "🎁 Unwrap Your 20% Discount Inside! 🎁".

We’ve found that medium-length subject lines (between 25 and 35 characters) drive the most opens, followed by short ones (fewer than 25 characters) for trigger-based emails.

Your subject line gets subscribers to open your email, but your email content is what gets them to engage. You can test various aspects of your email’s content, such as personalization, offers, social proof, image sizing and placement, images vs. videos, and messaging. We’ll drill down further into some of these specific tests below.

A/B testing is a powerful tool for any brand or lifecycle marketer, providing data-driven insights to optimize your marketing strategy and platform performance. For email marketing, one of our go-to strategies at Power Digital is testing dynamic versus static content—this can have notable impact on revenue. A commonly overlooked yet valuable test is assessing types of dynamic content, such as comparing "most viewed" items against "bestsellers" or "new arrivals" versus "bestsellers." Remember, consumer behavior is always evolving, so continuous retesting is essential for staying ahead.

- Melia Dion, Senior Lifecycle Strategist, Power Digital

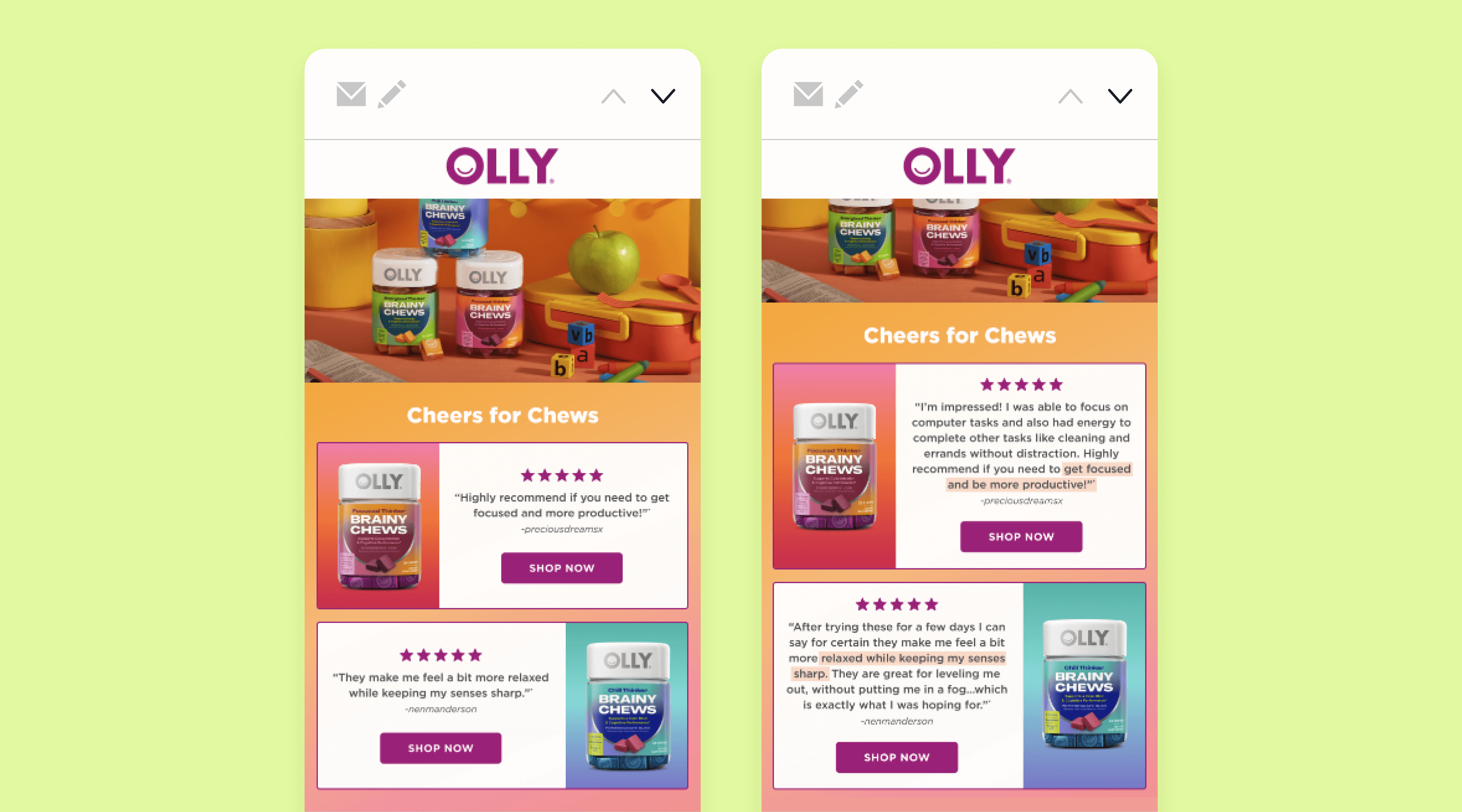

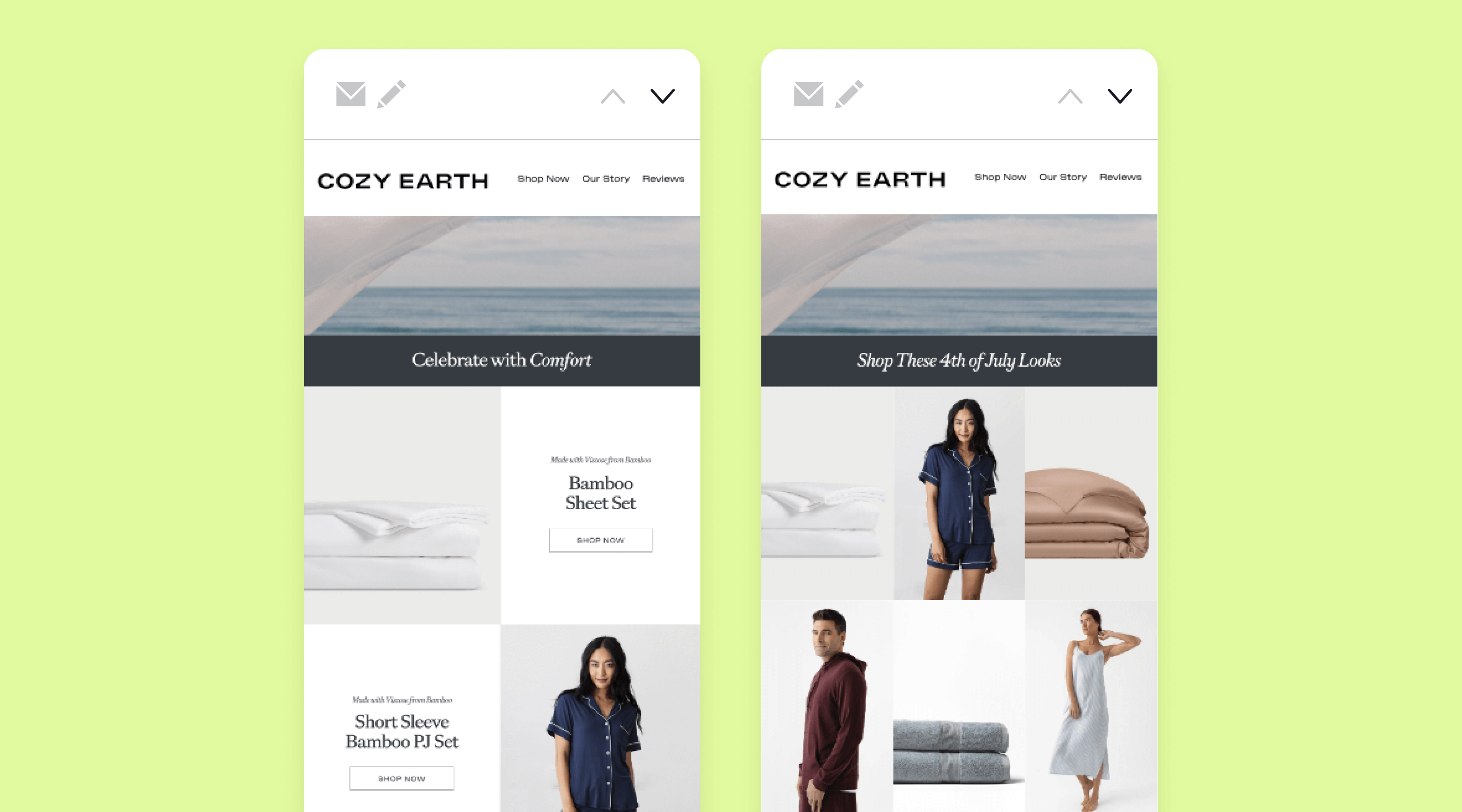

A/B testing the design of your email marketing campaigns can significantly impact user engagement and overall performance. This could involve testing different color schemes, fonts, layouts, image placements, or even the use of interactive elements like GIFs or buttons.

Testing these variables can provide valuable insights into what visually resonates with your audience. It's crucial to isolate one variable at a time for clear results and to ensure that each version is aligned with your brand's overall aesthetic to maintain consistency.

By A/B testing your CTAs, you can determine which approach motivates your audience to take action. This could involve testing different button colors, hyperlinked text versus buttons, varying the CTA copy (e.g., "Shop Now" vs. "Discover More"), experimenting with placement within the email, as well as the number of CTAs within an email.

These tests can reveal which language resonates most with your audience, and which type of CTA impacts click-through rates. By comparing the performance of different CTAs, you can gain valuable insights into what motivates your audience to engage and convert. These insights can then be used on other marketing channels to drive even better results.

A/B testing the send time of your email marketing campaigns can dramatically influence open rates and engagement. This could involve testing different times of the day, such as morning versus evening, or different days of the week, like weekdays versus weekends. Testing your email send time ensures your emails are seen and engaged with at the most effective moments.

Curious about the best time to send your messages during the busiest shopping holiday of the year? Check out our guide: When is the Best Time to Send SMS Marketing and Email During BFCM.

They say a picture is worth a thousand words, but we prefer pictures that are worth a thousand clicks. Imagery is a specific subset of content you can test in your email campaigns. This could involve testing various image styles, such as lifestyle photos versus product shots, or even comparing static images against animated GIFs.

A/B testing should be an ongoing part of your overall marketing strategy. Even when you find something that works for your brand, continue to test your campaigns and journeys regularly to make sure you’re always sending the most effective messages possible. Don't forget to download your A/B Testing Template to keep track of your tests.

Check out our Resource Hub for more tools, guides, and marketing tips.